HCI (Human Computer Interaction)

In this article, HCI (Human Computer Interaction) describes the peripherals a person uses to access the controls of a game. These can take the form of a controller, a remote, or another input device.

I will refer to a couple of terms throughout the article, so I will explain what they mean here.

The ergonomics of a controller is essentially how comfortable it is to hold: how well it fits into a pair of hands. A controller with good ergonomic design feels intuitive, so players can find the buttons they want to press by feel. The buttons need to be easy to press both individually and in combinations, and the controller needs to stay comfortable not just briefly, but over long play sessions and under stress!

The button configuration of a controller is simply where the buttons are located on it. The buttons need to be quick and easy to press. In most games these days you can assign buttons to different actions and functions, so a controller's layout needs to cater to all of this.

So, I will now go through the history of the HCI devices that have been introduced alongside video game consoles.

1970s

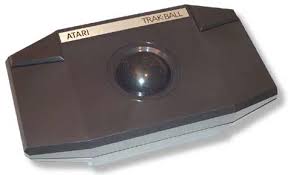

In the 1970s, the Atari 2600 was released. The Atari 2600 supported multiple input devices, such as the joystick, the Trakball, paddles and driving controllers. The paddles and driving controllers looked almost identical, but each provided a different control mechanism designed for different games. The Trakball (image left) was a controller with a spinning ball in the center.

1980s

In the 1980s, the Atari 2600's successor was released: the Atari 5200.

The 5200 again had a joystick controller, along with a revised version of the Trakball. The controller was revolutionary for its time because it let the user pause the game they were playing, a rare novelty back then and a big selling point!

The Nintendo NES was also released in the 1980s and brought possibly the biggest revolution in controllers, one still seen on pretty much every controller today: a directional pad (D-pad) with up, down, left and right.

Another important feature of the NES controller was its button layout: 'Start' and 'Select' buttons in the middle, and 'A' and 'B' buttons on the right. This layout also changed the face of controllers for years to come, as you will see later on.

The Sega Master System was also released in the 1980s. Its controller was squared-off like the NES's, and had a very similar button layout.

In the late 1980s, Sega released the Mega Drive/Genesis. It was the first console in history to feature an ergonomic controller, one shaped to fit the player's hands and comfortable to hold. Of course, this controller also had a very similar button layout to the NES and Master System, with the D-pad on the left and the buttons on the right.

In my previous posts, I rambled quite a lot about the success of the Game Boy, and of course, I will continue here!

The Game Boy was released in 1989, and it was hands down the best handheld console. Made by Nintendo, it used the same button layout as the NES. If you look at it, the layout is exactly the same, except that the A and B buttons are angled for more comfort. Nintendo would go on to keep this button layout in the consoles that followed.

1990s

In the mid-1990s, one of the most recognizable and successful consoles of all time was released: the PlayStation. The PlayStation had a more comfortable and ergonomic controller than any seen before; it was nice to hold and had very good button placement. As you can see, it had the same D-pad and 'Start'/'Select' buttons as earlier controllers. It also had face buttons on the right, but four instead of two.

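The PlayStation controller also had shoulder buttons, two on each side. Shoulder buttons had already appeared on the Super Nintendo controller, but the PlayStation doubled them up, and they have been a standard feature on controllers ever since.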

The 1990s also saw the release of the Nintendo 64 (N64), which had a very odd-looking controller indeed. The N64's controller was surprisingly comfortable, and again it featured many of the same buttons as other controllers. Alongside these, it added extra 'C' buttons on the right and an analog joystick in the middle. The N64 also had the first vibration functionality, achieved through an add-on pack called the Rumble Pak.

After the N64, Sony released the PlayStation DualShock controller, which added two small thumb-sticks (analog sticks) and built-in vibration. Other than these, it remained the same as the original PlayStation controller.

In the late 1990s, Sega released the Dreamcast. Its controller was the first to carry a screen, through the Visual Memory Unit (VMU) memory card that slotted into it, although the screen was very hard to see and use, as it was a very basic liquid crystal display.

2000s Onwards!

In the very early 2000s, Microsoft took a shot at the market with the Xbox. Its controller also had thumb-sticks, but placed in a much more ergonomic position. From this point on, controllers became much more comfortable to hold, and manufacturers began to nail button placement.

Nintendo also released a console in the early 2000s: the GameCube. The GameCube controller had its thumb-sticks in the same positions as the Xbox controller's, and a broadly similar button layout too.

In the early 2000s, Sony released the PlayStation 2. Although there was nothing revolutionary about its controller as such, Sony also released the EyeToy, the first motion-controlled peripheral for a games console. The EyeToy was a small camera: you stood in front of it and interacted with on-screen graphics.

Sony and Nintendo both released handheld consoles in the early 2000s: the PSP and the DS. The Nintendo DS featured touch-screen input, which was a big feature at the time, whereas the PSP had a high-resolution screen, though not a touchscreen. Both consoles were revolutionary in their own ways.

2005 saw the release of arguably the best controller of all time. In my opinion, the Xbox 360's controller was perfect: it fit hands exceptionally well and was incredibly comfortable to hold for long periods.

The Xbox 360 also came with wireless controllers that were very easy to connect and use, which was still a novelty at the time.

Sony's PlayStation 3 controller, the SIXAXIS, looked pretty much exactly the same as the original PlayStation and PlayStation 2 controllers, although it added wireless functionality and motion sensing, including a gyroscope (used with certain games).

Nintendo released the Wii in 2006, which had a state-of-the-art controller: a motion controller that was fully wireless at the same time. The Wii also supported an optional gamepad-style controller that you could plug in if you wanted one.

Nintendo released the third revision of the DS in 2008: the DSi. The DSi featured a front-facing camera and a revised shape, and that camera made the DSi a big game-changer.

Alongside the release of Mario Kart Wii, Nintendo released a Wii Remote accessory: the Wii Wheel. The Wii Wheel was possibly the dumbest gimmick that pretty much every Wii owner bought into. It was relatively expensive for a plastic shell, and it didn't really let the player do anything different. Even though it was a gimmick, it worked, and it sold very well for Nintendo. Hats off to them.

In 2009, Nintendo released yet another Wii accessory. It is apparent by now that it is Nintendo's style to release something and polish it over the years to maximize its sales. This accessory was the Wii MotionPlus, a small add-on for the controller that was essentially just a gyroscope. The gyroscope allowed players to add things such as topspin and backspin in a game of virtual tennis, and so on.

In 2009, Sony also released possibly the most forgotten revision of the PSP of all time. The PSP Go sold poorly, but it did offer very comfortable controls and a good button layout.

By 2010, Microsoft had taken a shot at the motion-control market with the Kinect. The Kinect did sell well, as it was often bundled with consoles, but people did not really get on with it. The Kinect was reported to not work well on the 360, and even when it did, it was not really what people wanted. Maybe this is because the majority of Xbox 360 owners were serious gamers, and motion controls worked better on a family console such as the Nintendo Wii?

In 2011, Nintendo released a whole new revision of the DS: the 3DS. The 3DS offered a glasses-free, semi-three-dimensional display, which was a huge unique selling point, and it sold very well because of it. The 3DS was also the first DS to have a thumb-stick, and Nintendo released a case for it that added a second thumb-stick.

This year (2015) saw the release of Nintendo's newest revision of the DS: the New 3DS. The New 3DS has a few refinements to its body, but its new selling point is 'stable 3D', a much better 3D display where the player does not have to keep their head in one specific position to see the 3D effect.

In 2012, Nintendo released a new Wii console: the Wii U. The Wii U's controller, the GamePad, had a large built-in screen that could mirror the TV display. In my opinion, the rest of the design resembles the 3DS fitted with the Circle Pad case; even if not, it definitely stays true to the usual Nintendo button layout.

In 2013, Microsoft released the Xbox One. The Xbox One's controller is very similar to the Xbox 360's, with pretty much the same button layout and features. I own both an Xbox One and an Xbox 360, and I can say that the Xbox One's controller has its pros and cons. Its thumb-sticks are very nice to use, with ridges around the perimeter for more grip, and it has rumble triggers, which I believe give much better haptic feedback to the player. It also has a D-pad that actually works (compared to the 360 controller's). What annoys me about this controller is that the guide button is in an awkward place to press, and the Select and Start buttons have been renamed (to View and Menu), which is confusing.

Also in 2013, Sony released the PS4. The PlayStation 4's controller had a lot of new and innovative features, such as the touch pad and the light bar at the back (for motion controls). One bad thing about the controller is how 'flimsy' it can be: the rubber covers on its thumb-sticks come off really easily.

With many of these controllers, each new revision is not too different from the last. A company will typically conduct a UCD (User Centered Design) survey, asking a group of people how they felt about the previous controller, which functions need building on, and whether there are any new features they would like. By conducting UCD, companies can produce a very polished and improved controller for their next console, which matters because the success of a console relies heavily on the ergonomics of its controller. A UCD survey needs to take many different factors into consideration, such as:

- What does the gamer have to do?

- Can the controller cater to the needs of different game genres?

- Who will be the main audience of the controller?

- What do gamers need from the controller?

A successful UCD test is carried out with a range of different people, with different interests, play styles, and levels of video gaming experience.

Portability is a big factor to consider with controllers too. If a controller is wireless, it has to connect to a different console with ease; it is easy to get impatient if you are waiting ages to play a game because your controller won't connect. A controller also has to be easy to disconnect and take to another place. Portability matters for handheld consoles as well, where battery life also has to be taken into consideration.

So, that is my section on HCI. Please stay tuned for the rest of this article :)

Matt